Unleashing the creative power of machines: Exploring generative AI, large language models and the future of content creation

Written by Viktoria Koleva | 7th of June 2023

For as long as humans have been around, we have harnessed the power of nature to create tools that make our lives easier and more productive. Inspired by the wonders of nature, we have created art, architecture, and literature that take us on a journey toward a higher understanding of ourselves. Now we pass this knowledge on to machines and empower them to search for patterns, sequences, and meaning. All in our never-ending quest for innovation.

The boundaries between human creativity and machine innovation have long been blurred. Where the human mind faces a challenge, the machine offers an escape route. Where the machine reaches its limits and finds itself in a blind alley, the human draws a new path. One example of this interplay is generative AI, a type of artificial intelligence technology that can generate new and original content such as text, images, videos, sounds, and 3D models based on the data it was trained on. At the forefront of this innovation stands GPT (generative pre-trained transformer), a family of advanced language models powering AI content creation.

In this blog, we discuss how human ingenuity and machine intelligence intersect, fueling the next technological revolution and reshaping how we think, create, and experience content for years to come.

1. Generative AI explained

At this point, almost everyone has heard, read, or watched something about Generative AI, OpenAI, and ChatGPT. Have you ever felt overwhelmed by the numerous blog articles and videos on what generative AI is? That may be because you started your AI itinerary at a later stop and missed some important milestones that give context to the journey. So, let’s take a step back and dive into artificial intelligence and machine learning first.

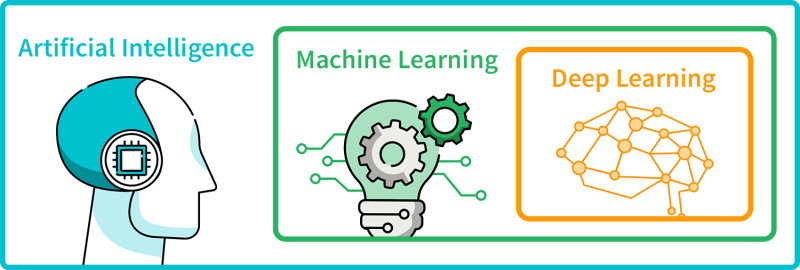

1.1 Artificial intelligence vs. machine learning

Artificial intelligence (AI) is a discipline in computer science that focuses on developing intelligent agents: systems capable of autonomous reasoning, learning, and action. Broadly, AI aims to build computer systems that perform tasks normally associated with human intelligence. Machine learning (ML), on the other hand, is a subfield of artificial intelligence that focuses on the development and use of self-learning algorithms that derive knowledge from data to predict outcomes. ML algorithms enable computers to learn and improve automatically from experience or data without explicit instructions for each specific task. Instead, they recognize patterns and make inferences based on the input data they receive.

Two of the most common classes of ML models are supervised and unsupervised models. Supervised learning uses labeled datasets to train algorithms to classify data and predict outcomes. Labeled data comes with a tag such as a name, type, or number. In supervised learning, the algorithm uses past examples to predict future values. Based on the input values, the model outputs a prediction (predicted output) and compares it to the label in the training data (expected output). The gap between the two is measured as an error. The model then adjusts itself to reduce that error until its predictions closely match the expected outputs. Unsupervised learning, as the name suggests, uses unlabeled datasets and is all about working with raw data and discovering whether it naturally falls into groups or classes with common features. A third type is reinforcement learning. Here, the algorithm is not trained on labeled examples. Instead, it learns through trial and error: it takes actions in an environment and receives feedback in the form of rewards or penalties, which often arrives only after reaching a goal state rather than after every single step.
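The supervised learning loop described above (predict, measure the error, adjust, repeat) can be sketched in a few lines of Python. This is a minimal illustration rather than a production recipe: the toy dataset, the straight-line model, and the names `mean_squared_error` and `train` are assumptions made for this example.

```python
# Labeled training data: inputs x with expected outputs y (here y = 2x + 1).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]


def mean_squared_error(w, b):
    """Average squared gap between predicted and expected outputs."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def train(steps=2000, learning_rate=0.05):
    """Fit the line y = w * x + b by repeatedly reducing the error."""
    w, b = 0.0, 0.0  # start with an uninformed model
    n = len(xs)
    for _ in range(steps):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Move both parameters a small step in the direction that lowers the error.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


w, b = train()
print(f"learned w={w:.3f}, b={b:.3f}, error={mean_squared_error(w, b):.6f}")
```

The error starts high for the untrained model and shrinks as the parameters move toward the values that generated the labels.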

1.2 Where does deep learning come into play?

Now that we have defined artificial intelligence and machine learning, let’s dive into deep learning and its applications for Generative AI. Deep learning is a subset of machine learning that uses artificial neural networks (ANNs) with many layers, which can capture more complex patterns than classical machine learning methods. Neural networks are inspired by the human brain and attempt to simulate its behavior. They consist of numerous interconnected nodes, or neurons, that learn to perform tasks by processing data and making predictions. So, how does deep learning differ from classical machine learning, you might ask? By the type of data it works with and by the way it learns from it. While classical machine learning algorithms typically rely on structured data and on features that humans define during pre-processing, deep learning algorithms can also process unstructured data such as text and images, automating feature definition and removing some of the dependency on human input. Neural networks can be trained on labeled data, unlabeled data, or both. Training on both is called semi-supervised learning. In semi-supervised learning, neural networks are trained on a small amount of labeled data and a large amount of unlabeled data. By analyzing the labeled data, the neural network learns the basics of the task. The unlabeled data, on the other hand, helps the neural network generalize to new examples.
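One simple way to picture semi-supervised learning is self-training, also known as pseudo-labeling: a model trained on the few labeled examples labels the unlabeled ones, and is then retrained on everything. The sketch below is only an illustration under loud assumptions. It swaps the neural network for a tiny nearest-centroid classifier to stay short, and the data and the names `centroids`, `predict`, and `self_train` are made up for this example.

```python
def centroids(points_by_label):
    """Compute the average position of the points known for each label."""
    return {label: sum(pts) / len(pts) for label, pts in points_by_label.items()}


def predict(cents, x):
    """Assign x to the label whose centroid is closest."""
    return min(cents, key=lambda label: abs(cents[label] - x))


def self_train(labeled, unlabeled):
    """One round of self-training (pseudo-labeling)."""
    # Step 1: learn the basics of the task from the small labeled set.
    cents = centroids(labeled)
    # Step 2: let the model label the unlabeled data itself.
    augmented = {label: list(pts) for label, pts in labeled.items()}
    for x in unlabeled:
        augmented[predict(cents, x)].append(x)
    # Step 3: retrain on labeled plus pseudo-labeled data.
    return centroids(augmented)


# A small labeled set and a larger unlabeled set (hypothetical 1-D measurements).
labeled = {"low": [1.0], "high": [9.0]}
unlabeled = [0.5, 1.5, 2.0, 2.5, 7.5, 8.0, 8.5, 9.5]

final_centroids = self_train(labeled, unlabeled)
print(final_centroids)
```

The retrained centroids reflect where the unlabeled data actually clusters, not just the two labeled points, which is the sense in which unlabeled data helps the model generalize.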

1.3 Connecting the dots – Generative AI defined

Generative AI is a subset of deep learning. It uses artificial neural networks that can process both labeled and unlabeled data through supervised, unsupervised, and semi-supervised learning methods. Simply put, Generative AI is a class of algorithms designed to generate new content (statistically probable outputs) based on the raw data they have been trained on.
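To make "statistically probable outputs" more concrete, here is a deliberately tiny generative model: a bigram model that counts which word follows which in its training text and then samples new text from those counts. It is far simpler than the neural networks discussed in this article, and the corpus and the names `train_bigram_model` and `generate` are assumptions for illustration only.

```python
import random
from collections import defaultdict

# A toy training corpus (made up for this example).
corpus = "the cat sat on the mat and the cat ate the fish"


def train_bigram_model(text):
    """Record, for every word, the words that followed it in the training text."""
    words = text.split()
    followers = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return followers


def generate(model, start, length, seed=0):
    """Sample a new word sequence; frequent followers are picked more often."""
    rng = random.Random(seed)
    output = [start]
    for _ in range(length - 1):
        candidates = model.get(output[-1])
        if not candidates:  # the last word never had a follower in training
            break
        output.append(rng.choice(candidates))
    return " ".join(output)


model = train_bigram_model(corpus)
print(generate(model, "the", 8, seed=1))
```

The generated sentence may never appear in the corpus, yet every word transition in it was seen during training, which mirrors the idea of new content that shares the characteristics of the training data.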

Generative AI has grown tremendously in recent years. Three fundamental algorithms have shaped Generative AI into what we witness today.

a) Generative Adversarial Networks (GANs) are a type of generative model trained to create new data instances that resemble the training data. GANs have two components: the generator and the discriminator. The generator creates new data samples, which serve as negative (fake) examples for the discriminator. The discriminator learns to distinguish the generated data from the real data, and the generator is penalized whenever its output is detected as fake. Through this competition, the generator learns to produce increasingly realistic samples.

b) Variational Autoencoders (VAEs) are a type of generative model capable of learning compact representations of input data. VAEs consist of two main elements: an encoder and a decoder. The encoder produces a new representation of the data from its original representation, and the decoder performs the reverse process. Another important term here is dimensionality reduction, which can be thought of as data compression: the encoder compresses the data from the initial space into the encoded space (also called the latent space), while the decoder decompresses it. Depending on the initial data distribution, the encoder definition, and the size of the latent space, some information can be lost and may not be recoverable. Once trained, VAEs can generate new data samples by randomly sampling points from the learned latent space distribution and passing them through the decoder network. This property makes VAEs useful for tasks such as data synthesis, anomaly detection, and dimensionality reduction.

c) Transformer networks are a type of neural network that can work with sequential data, such as natural language. Their key innovative component is the self-attention mechanism. While recurrent neural networks process a sequence one element at a time, transformers can process all elements of a sequence in parallel. The self-attention mechanism allows the model to weigh the importance of different input elements (words or tokens) when making predictions while considering their relationships within the context.
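The self-attention idea from item c) can be sketched with scaled dot-product attention in plain Python. This is a simplified illustration, not a full transformer layer: it assumes each token's vector is used directly as its query, key, and value (real models apply learned projections first), and the toy vectors and function names are made up for this example.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Scaled dot-product self-attention without learned projections."""
    d = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Score how relevant every token is to the current token.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(d)
            for key in vectors
        ]
        weights = softmax(scores)
        # The output is a weighted mix of all token vectors.
        mixed = [sum(w * v[j] for w, v in zip(weights, vectors)) for j in range(d)]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


# Three toy token vectors; the first and third point in the same direction.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
outputs, weights = self_attention(tokens)
print([round(w, 3) for w in weights[0]])
```

Each token's weights are computed independently of the others, which is why the whole sequence can be processed in parallel. In this toy input, the first token attends more strongly to the third token (which resembles it) than to the second.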

Generative AI models are built using various techniques and tools, such as deep learning frameworks, Markov Chain Monte Carlo (MCMC) methods, reinforcement learning, and Large Language Models (read more on that in the next section).

To summarize, Generative AI is a subfield of deep learning that focuses on creating new content, such as text, images, audio, and video, using different algorithms that are trained on a specific dataset. The algorithms then use that knowledge to generate new, previously unseen samples that are not identical to the input data but share its characteristics.

2. Large Language Models (LLMs)

Large Language Models are a subset of deep learning. LLMs are large, general-purpose language models designed to process vast amounts of text data and, by using advanced neural network architectures, learn the patterns and relationships between words, phrases, and sentences in natural language. They are trained for general purposes to solve routine language problems such as text classification, question answering, document summarization, and text generation. The models can then be fine-tuned to solve specific problems in different areas using small datasets.

Here are some of the main features that define Large Language Models:

a) Size and scale: Large Language Models are trained on a vast amount of text data, encompassing billions of words.

b) Contextual understanding: LLMs use self-attention mechanisms and transformers to identify connections between words and phrases, enabling them to produce contextually relevant responses.

c) General purpose: Because human language shares many common patterns across tasks, a single LLM can handle a wide range of routine language problems (see above) reasonably well.

d) Pre-training and fine-tuning: After pre-training on a large dataset, LLMs can be further fine-tuned on specific tasks or domains using a smaller dataset. Fine-tuning helps tailor the model’s performance for specific applications, allowing it to produce more accurate and contextually appropriate responses.
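The pre-training and fine-tuning idea from item d) can be illustrated at a very small scale. The sketch below is an analogy under stated assumptions, not how LLMs are actually trained: it uses a one-parameter model `y = w * x` in place of a neural network, and the datasets and the names `gradient_descent` and `mse` are hypothetical.

```python
def gradient_descent(w, data, steps, learning_rate):
    """Reduce the mean squared error of the model y = w * x on the given data."""
    n = len(data)
    for _ in range(steps):
        grad = sum(2 * (w * x - y) * x for x, y in data) / n
        w -= learning_rate * grad
    return w


def mse(w, data):
    """Mean squared error of the model y = w * x on the given data."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)


# Large "general" dataset (100 examples) following y = 2x.
general_data = [(k / 10, 2.0 * (k / 10)) for k in range(1, 101)]
# Small "domain" dataset (3 examples) following a slightly different rule, y = 2.5x.
domain_data = [(1.0, 2.5), (2.0, 5.0), (3.0, 7.5)]

# Pre-training: many steps on the large general dataset.
pretrained = gradient_descent(0.0, general_data, steps=200, learning_rate=0.01)
# Fine-tuning: a few steps on the small dataset, starting from the pre-trained weight.
fine_tuned = gradient_descent(pretrained, domain_data, steps=5, learning_rate=0.01)
# Baseline: the same few steps on the small dataset, but starting from scratch.
from_scratch = gradient_descent(0.0, domain_data, steps=5, learning_rate=0.01)

print(mse(pretrained, domain_data), mse(fine_tuned, domain_data), mse(from_scratch, domain_data))
```

With the same small budget of training steps, the model that starts from pre-trained weights ends up with a lower error on the domain data than the one trained from scratch, which is the practical appeal of fine-tuning.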

While language models are trained on data pulled from various sources such as Wikipedia, web pages, and communities such as Reddit, human feedback is also used to fine-tune the models. The training process is similar to how we learn new languages: we need guidance from teachers and an environment where we can hear and observe native speakers.

So, what are Large Language Models used for? Use cases include translation, content creation (blog articles, product descriptions, etc.), chatbots, summarization of large amounts of text, and many more.

3. Exploring GPT, ChatGPT, and more

So, now that we have understood what Generative AI and LLMs are, it’s time to deep dive into the different language models out there. Over the course of the past couple of months, almost everyone has heard or read something about ChatGPT. So, what does GPT mean exactly? What is ChatGPT, and what does it have to do with OpenAI?

GPT stands for Generative Pre-trained Transformer, a family of neural network models that use the transformer architecture. By harnessing GPT models, applications can generate text that closely resembles human writing and, when paired with other generative models, other content such as images, audio, and video. Moreover, GPT models empower these applications to engage in conversations and answer questions posed by users. Use cases of GPT include:

– Create marketing content such as social media posts, blog articles, e-books, guidelines, pitch decks, and email marketing campaigns

– Rephrase sentences and change the tone and style of paragraphs

– Write and learn code

– Analyze, structure and present data

– Build interactive voice assistants

– Create imagery, audio, and video content

3.1 What is ChatGPT?

ChatGPT is an interactive conversational AI model developed by OpenAI, a San Francisco-based artificial intelligence lab founded in 2015. It is built upon the GPT architecture and designed to engage in text-based conversations with users.

ChatGPT is trained on a vast amount of data from the internet, allowing it to understand and generate human-like responses. It can provide coherent and contextually relevant answers to questions, engage in dialogue, and even exhibit a certain level of conversational flow.

Similar to GPT, ChatGPT can be used in a variety of fields and for different purposes. Some of them include:

– Customer support/virtual assistants: ChatGPT can be utilized as a virtual assistant providing instant responses to user inquiries and guiding them through a process or a troubleshooting procedure

– Content generation: Marketers and content creators can use ChatGPT to help them in the brainstorming process or in the creation of blog articles, pitch decks, strategies, etc.

– Educational support: ChatGPT can support students by answering questions, explaining concepts, and providing additional learning resources. It can be a study companion, offering interactive quizzes or assisting with research.

– Information technology and product development: Teams can leverage the power of generative AI to assist in writing code and documentation and to prototype product design rapidly.

And while ChatGPT has impressed users all over the world with its capabilities and interactiveness, we should all be aware that it may sometimes produce responses that sound plausible but are inaccurate or factually incorrect. This is why we should be cautious when using it and always double-check the output it produces.

3.2 What other language models are there?

While GPT has certainly gained much attention over the past couple of months, there are other language models worth looking into. Here is an overview of the most common ones that considers their advantages, disadvantages, and capabilities:

GPT-3

Advantages: Vast amount of pre-training data (45 terabytes), content generation capabilities, supports various tasks through prompt engineering.

Disadvantages: Requires substantial computational resources, limited control over generated output, potential for biased or incorrect responses.

Capabilities: Text completion, language translation, question answering, chatbots, creative writing.

BERT

Advantages: Strong contextual understanding, effective for natural language understanding tasks, supports fine-tuning for specific domains.

Disadvantages: Computationally expensive, not as strong for text generation tasks, lacks long-range dependency understanding.

Capabilities: Named entity recognition, sentiment analysis, text classification, question answering.

Transformer-XL

Advantages: Handles long-range dependencies well, understands context across long documents, better memory capabilities.

Disadvantages: Higher training time and computational requirements, slower inference speed compared to other models.

Capabilities: Language modeling, text generation, summaries, document classification.

GPT-2

Advantages: High-quality text generation, better control over generated output compared to GPT-3, accessible for smaller-scale projects.

Disadvantages: Smaller model size limits the diversity of knowledge, less recent training data compared to newer models.

Capabilities: Creative writing, text completion, dialogue systems, text summaries.

T5

Advantages: Unified model for different NLP tasks, supports multitask learning, efficient for transfer learning.

Disadvantages: Less emphasis on fine-tuning, requires domain-specific training for certain tasks, larger model size.

Capabilities: Text classification, machine translation, document generation, summarization.

3.3 ChatGPT and its implications for content creation and society

Generative AI has taken the world by storm. It has found its place in the domain that was, up until recently, reserved for humans only: the creative realm. As we marvel at the stunning images that Generative AI created for us from a single prompt, as we read through that blog introduction that we couldn’t quite figure out, as we scroll through the thousands of words of research, we feel thankful for the advancement of technology and its ever-growing capacities. But consumed by instant gratification, we forget to look at the bigger picture. With each disruptive technology comes great risk. Now that the technology exists, we start asking ourselves: How will this technology be integrated into our daily lives? How does it disrupt behavioral patterns? What new behaviors and habits will result from it? Do we have the capacity to handle this disruption? How is the technology affecting companies, and will certain businesses become obsolete? The thing is, we never realize the effects until they have happened. And we never realize just how dangerous this all could be until we feel that what we value most, safety, is threatened.

Generative AI is a powerful tool that can empower those in higher positions while putting ordinary workers at a disadvantage, since their jobs might become obsolete precisely because of this uneven distribution of power. According to a global economics research report from Goldman Sachs, roughly two-thirds of current jobs are exposed to some degree of AI automation. Furthermore, generative AI could substitute up to one-fourth of current work, exposing the equivalent of around 300 million full-time jobs to automation. But this is not all bad news. While the influence of AI on the labor market is expected to be substantial, the majority of jobs and industries are only partially exposed to automation, indicating that AI is more likely to complement rather than replace them. Automating processes and introducing AI to certain work fields can result in higher productivity, freeing up time for acquiring new knowledge that can in turn improve output. Academic studies show that employees at early adopter companies observe a significant increase in labor productivity growth, typically a boost of around 2-3 percentage points per year.

Generative AI really can boost productivity. Back in the day, when you wanted a beautiful flyer or presentation created, you had to spend hours on it. Now there is a variety of tools powered by AI, giving you the possibility to create visually appealing materials at the click of a button and then fine-tune them as you wish. Why spend time researching and gathering resources when you can simply use Generative AI and have your first draft in minutes?

This is where the danger swoops in. Yes, AI can automate a lot of manual and tedious tasks that weren’t stimulating your brain anyhow. And that’s totally fine. But by relying so much on Generative AI’s functionalities, people can become passive. They start accepting mediocre content as long as it helps them get the job done faster. They stop thinking critically and start going along with the mass distribution of information that can be biased and potentially harmful. Because who has time to cross-validate every assertion made by ChatGPT? Given that its training data only covers information and research generated until 2021 and that it does not cite any scientific sources when making statements, ChatGPT should be used cautiously and always as a supplement, never as the primary vehicle. By relying solely on AI to generate content, we risk becoming passive spectators. We risk dimming our creativity, our curiosity, and our eagerness to create and learn. And isn’t that what being human is about, after all?

4. Final thoughts

From generating captivating stories and composing beautiful music to revolutionizing content creation across industries, the potential of generative AI is extraordinary. However, we must be cautious as we embrace this technology. While the possibilities are vast, we must consider the ethics, biases, and risks associated with relying too heavily on AI-generated content. By finding the right balance between human intelligence and the capabilities of machines, we can shape a future where generative AI becomes a powerful tool, enhancing our creativity and amplifying our capabilities. A future where human ingenuity intersects with machine innovation to build a better world. A future where possibilities are endless and utilized for a harmonious co-existence. A future we would be happy to step into.

At simpleshow, we have been using the power of AI for the past 7 years to give our users the ability to create video content at the click of a button while making data safety a top priority. We strongly believe that Generative AI has limitless potential to make communication and work more productive and more efficient. With its capabilities and future development, Generative AI can be used to solve real business challenges.

This is why we are thrilled to announce the launch of the Story Generator (coming soon!). The Story Generator is a custom-built, powerful technology stack that uses text-generative AI, enriched with security and storytelling features to create perfectly tailored explainer video scripts in an instant.

Join us for an exclusive feature premiere event where you will experience this groundbreaking feature live and gain insights into how generative AI is shaping the future of video creation.
